February in review

Here are some of the interesting links I found while browsing the interweb lately.

Chloé-Agathe Azencott released the second edition of her textbook on machine learning (in French): Introduction au Machine Learning (PDF, 180 pp.). I went through the book quickly, but I plan to come back to it later. Together with Philippe Besse’s books, I think it is one of the only books I have read in French on machine learning, and it’s quite relaxing actually.

Lessons learned from writing ShellCheck, GitHub’s now most starred Haskell project. Interesting blog post on ShellCheck, “a shell script linter, (that) actually started life in 2012 as an IRC bot (of all things!) on #bash@Freenode.” This is what I use in Emacs for shell scripts, since Flycheck fully supports it. I didn’t know ShellCheck was written in Haskell, though. The author notes that Haskell has an undeniably high barrier to entry for the uninitiated, while ShellCheck’s target audience is not Haskell developers, and that the Haskell ecosystem moves and breaks very quickly: new changes would frequently break on older platform versions.

JuliaLang: The Ingredients for a Composable Programming Language. I reinstalled Julia 1.x recently and I hope to find some time to experiment with it a little at some point. I played with versions 0.4 and 0.6 some years ago, but the growing list of changes from one version to the next, not to mention the incompatibility issues encountered here and there between v0.6 and v0.7, caused me to abandon the idea of working with it at a professional level. This blog post discusses why developing a package is better, easier, or safer than writing local modules, and how Julia’s combination of duck-typing with multiple dispatch happens to be very powerful.
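To give a rough idea of what dispatching on the types of all arguments looks like, here is a toy sketch in Python. Python has no built-in multiple dispatch, so the `register` decorator, the `_methods` table and the `combine` function are all hypothetical names made up for this illustration, and the lookup rule (walking each argument's MRO, first argument first) is a simplification that does not reproduce Julia's actual method-resolution rules.

```python
from typing import Any, Callable, Dict, Tuple

# Hypothetical registry: maps a pair of argument types to an implementation.
_methods: Dict[Tuple[type, type], Callable[[Any, Any], Any]] = {}


def register(type_a: type, type_b: type):
    """Register an implementation of `combine` for the pair (type_a, type_b)."""
    def decorator(func):
        _methods[(type_a, type_b)] = func
        return func
    return decorator


def combine(a, b):
    """Pick an implementation based on the runtime types of BOTH arguments."""
    # Walk the class hierarchies from most to least specific.
    # Simplification: the first argument's specificity takes priority.
    for type_a in type(a).__mro__:
        for type_b in type(b).__mro__:
            method = _methods.get((type_a, type_b))
            if method is not None:
                return method(a, b)
    raise TypeError(
        f"no method for ({type(a).__name__}, {type(b).__name__})"
    )


@register(int, int)
def _add(a, b):
    return a + b


@register(str, str)
def _concat(a, b):
    return a + b


@register(str, int)
def _repeat(a, b):
    return a * b


if __name__ == "__main__":
    print(combine(2, 3))        # 5
    print(combine("ab", "cd"))  # abcd
    print(combine("ab", 2))     # abab
```

The point of the sketch is only that new behaviour is added by registering another type pair, without touching `combine` or the existing types.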

Whenever I have to write a quick Haskell script, I use a shebang like this:

    #! /usr/bin/env stack
    -- stack --resolver lts-13.26 script

Now I learn that there is stack script. Great!

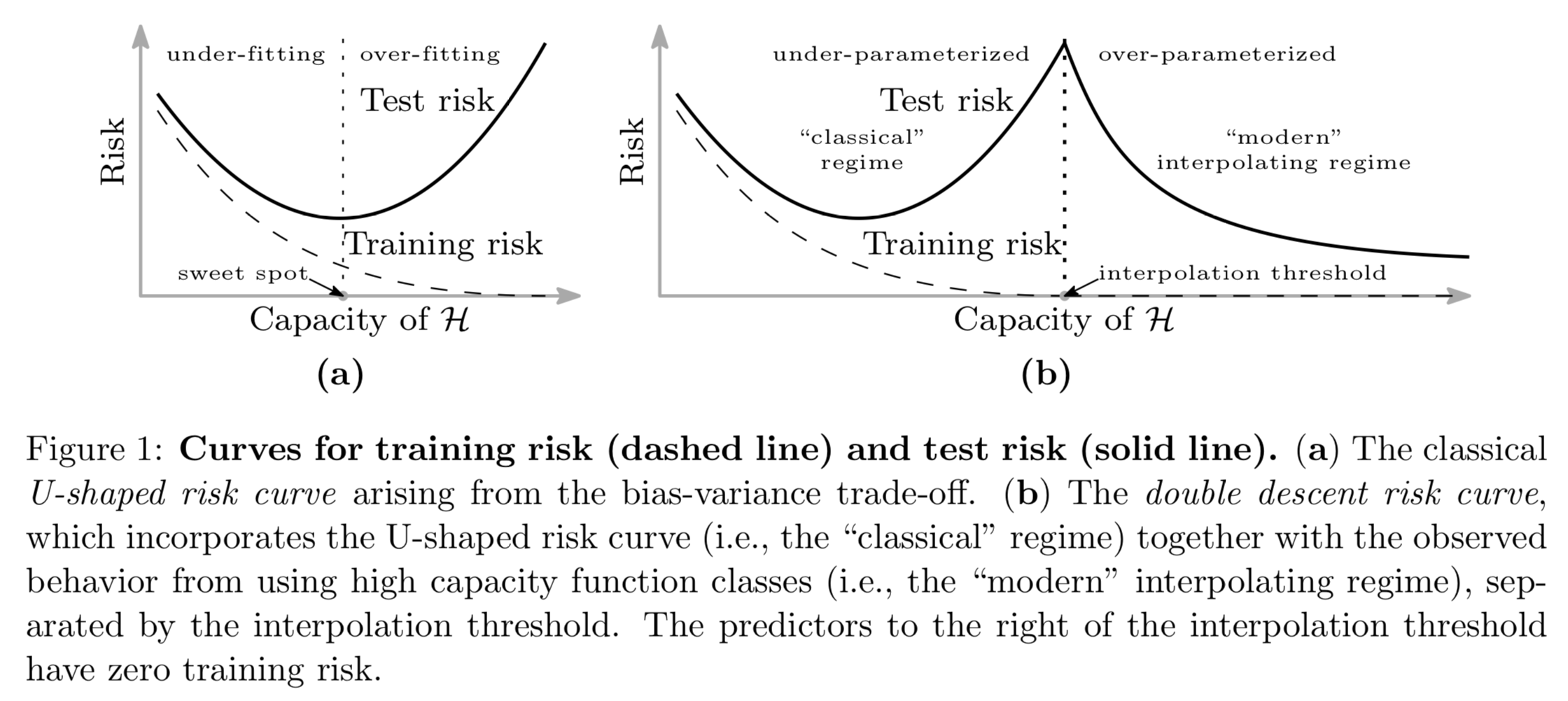

Reconciling modern machine learning practice and the bias-variance trade-off, or how increasing model capacity beyond the point of interpolation results in improved performance. Nice read!

Recently, I heard about loky for asynchronous processing in Python. In the meantime, I’m still learning how to use the multiprocessing package correctly, but TBH I found numba’s @jit decorator so powerful for simple tasks (e.g., parallelizing simple “for” loops and the like) that I’m quite happy with what I have for now.
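For reference, parallelizing a simple “for” loop with the standard library alone might look like the sketch below. It uses `concurrent.futures` (which builds on multiprocessing) rather than loky or numba, and `slow_square` and `parallel_squares` are placeholder names invented for the example, so take it as an illustration under those assumptions rather than a recommended setup.

```python
from concurrent.futures import Executor, ProcessPoolExecutor
from typing import Iterable, List


def slow_square(n: int) -> int:
    """Stand-in for the body of a CPU-bound loop iteration (hypothetical)."""
    return n * n


def parallel_squares(values: Iterable[int], executor: Executor,
                     chunksize: int = 1) -> List[int]:
    """Apply slow_square to each value using the given executor.

    Executor.map returns results in input order, like the sequential loop.
    """
    return list(executor.map(slow_square, values, chunksize=chunksize))


if __name__ == "__main__":
    # The __main__ guard matters: worker processes may re-import this module.
    with ProcessPoolExecutor(max_workers=2) as pool:
        print(parallel_squares(range(10), pool))
```

Passing the executor in as an argument keeps the loop body independent of how the work is distributed, so a thread pool can be swapped in for I/O-bound work.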

I’m not familiar with state-space models, but I came across the following review thanks to Gavin Simpson: An introduction to state-space modeling of ecological time series.