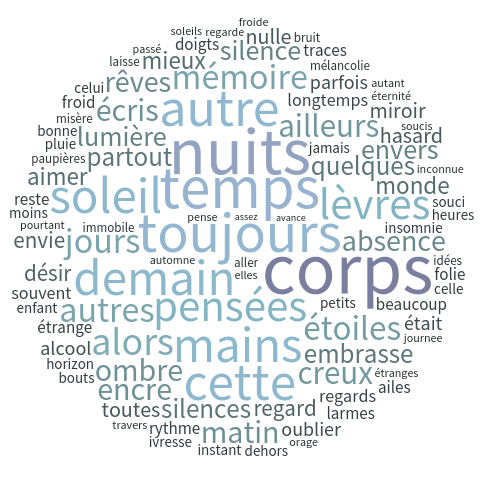

Life in a few words

Just out of curiosity, I made a quick word cloud in Mathematica, with very little normalization (capital letters, punctuation characters, stop words, short words, etc.), to summarize some personal stuff I wrote over the last 15 years or so, and I thought I would just put it right here.

My very basic script is shown below (more solutions are available on Stack Exchange):

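    (* Load the combined writings as a single string *)
    data = Import["~/Documents/home/writings/combined.txt"];
    (* Extra words to discard, found by trial and error *)
    junk = {"comme", "quand", "enfin", "lorsque", "après", "jusqu",
       "sinon", "aujourd", "autour", "entre", "aussi", "toute", "petit",
       "avoir", "encore", "aurais", "étais", "avais", "juste", "leurs",
       "parce"};
    (* Split into lowercase words, drop stop words, and blank out date-like tokens *)
    cleanedData =
      StringReplace[#, RegularExpression[
          "\\b[0-9]+[/-][0-9]+([/-][0-9]+)?\\b"] -> ""] & /@
       DeleteStopwords[ToLowerCase[TextWords[data]]];
    (* Replace punctuation with spaces, re-split, and keep only words longer than 4 characters *)
    cleanedData =
      Select[Flatten[
        StringSplit[
         StringReplace[cleanedData, PunctuationCharacter -> " "]]],
       StringLength[#] > 4 && # != "comme" &];
    (* Remove the junk words *)
    cleanedData = DeleteCases[cleanedData, x_ /; MemberQ[junk, x]];
    (* Draw the word cloud inside a disk *)
    WordCloud[cleanedData, Disk[],
     ColorFunction -> ColorData["AtlanticColors"]]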

The sieve for junk words was quickly devised by trial and error, so it is not expected to be perfect.
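For what it's worth, one way to run that kind of trial-and-error pass is to look at the most frequent remaining words and pick out the uninformative ones by eye. This is only a rough sketch, assuming `cleanedData` is the word list produced by the script above; the name `topWords`, the cutoff of 30, and the extra junk word are made up for illustration.

```mathematica
(* Count each word and keep the 30 most frequent ones (or fewer if the list is short) *)
topWords = TakeLargest[Counts[cleanedData], UpTo[30]]

(* Hypothetical follow-up: add whatever looks uninformative to junk and filter again. *)
(* "toujours" is only an illustrative entry, not taken from the actual list. *)
junk = Join[junk, {"toujours"}];
cleanedData = DeleteCases[cleanedData, x_ /; MemberQ[junk, x]];
```

Repeating this a few times until the top of the list looks meaningful should be enough for a quick word cloud.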

♪ Bob Dylan • Changing of the Guards